While running the init_atmosphere_model executable, the program runs fine for nearly 18 minutes and then simply stops without producing any error messages. My submission script allows it to run for up to 10 hours, so time should not be an issue. I have retried several times, and it stops at the same spot each time. Do you have any recommendations for how and where to start troubleshooting?

init_atmosphere stops

- Thread starter zmenzo

This post was from a previous version of the WRF&MPAS-A Support Forum. New replies have been disabled and if you have follow up questions related to this post, then please start a new thread from the forum home page.

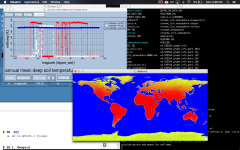

It looks like your screenshot is from an execution of the atmosphere_model program (i.e., the model itself) rather than the init_atmosphere_model program. The log output indicates that NaNs were present in the model velocity fields, which is likely the reason why the model eventually failed. Can you attach your namelist.atmosphere file? On which mesh are you running your simulation?

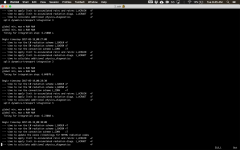

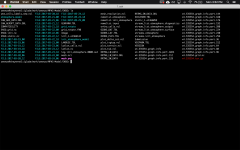

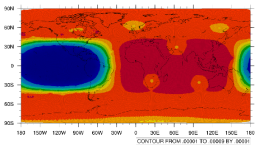

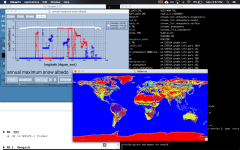

Since my last post, I have been trying to troubleshoot this problem, and I finally went as far as rebuilding the entire model and rerunning the same steps. Unfortunately, I get the same issue, though without the NaN wind error you mentioned in your previous comment. The program simply stops a few moments into the init step and does not produce an error file. Anticipating this, I captured each step with screenshots and TextEdit files, which are attached to this message. There were no errors after creating the static.nc file. If you have any suggestions, I would be very grateful.

Attachments:

- 0.build_atmo.png (675.3 KB)
- 0.build_init.png (556.8 KB)
- 1.ENSO.mesh.png (369.8 KB)
- 2.static_log.png (481.5 KB)
- 2.static_test.png (741.4 KB)
- 2.static_test2.png (837.1 KB)
- 2.static_name.rtf (1.5 KB)
- 2.static_streams.rtf (1.3 KB)
- 3.init_log.rtf (7.4 KB)
- 3.init_name.rtf (1.5 KB)

The email notification for the failed batch job:

PBS Job Id: 1366142.chadmin1.ib0.cheyenne.ucar.edu

Job Name: init_1

Execution terminated

Exit_status=265

resources_used.cpupercent=2859

resources_used.cput=00:17:00

resources_used.mem=44449788kb

resources_used.ncpus=36

resources_used.vmem=2500381880kb

resources_used.walltime=00:00:30
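The numbers in this email are worth reading closely: `cput` (17 minutes) is CPU time summed over all cores, while `walltime` shows the job actually ran for only about 30 seconds. The short Python sketch below parses the fields and decodes the exit status. It assumes the PBS Professional convention that an exit status above 256 means the job was killed by signal (status - 256), so 265 would correspond to signal 9 (SIGKILL). That is consistent with the job being killed for exceeding available memory, though other causes of SIGKILL are possible. The helper names are hypothetical, not part of any PBS tool.

```python
import signal

# Lines copied from the PBS job-notification email above.
EMAIL = """\
Exit_status=265
resources_used.cpupercent=2859
resources_used.cput=00:17:00
resources_used.mem=44449788kb
resources_used.ncpus=36
resources_used.vmem=2500381880kb
resources_used.walltime=00:00:30
"""


def parse_pbs_fields(text):
    """Collect key=value pairs from a PBS notification email."""
    fields = {}
    for line in text.splitlines():
        if "=" in line:
            key, value = line.split("=", 1)
            fields[key.strip()] = value.strip()
    return fields


def hms_to_seconds(hms):
    """Convert an HH:MM:SS string to seconds."""
    h, m, s = (int(part) for part in hms.split(":"))
    return 3600 * h + 60 * m + s


def decode_exit_status(status):
    """ASSUMPTION: PBS Professional convention, where values above 256
    mean the job was killed by signal number (status - 256)."""
    if status > 256:
        signum = status - 256
        try:
            return f"killed by signal {signum} ({signal.Signals(signum).name})"
        except ValueError:
            return f"killed by signal {signum}"
    return f"exited with status {status}"


fields = parse_pbs_fields(EMAIL)
exit_desc = decode_exit_status(int(fields["Exit_status"]))
cpu_s = hms_to_seconds(fields["resources_used.cput"])
wall_s = hms_to_seconds(fields["resources_used.walltime"])
# PBS reports memory in kb (units of 1024 bytes); convert to GiB.
mem_gib = int(fields["resources_used.mem"].removesuffix("kb")) / 1024**2

print(exit_desc)
print(f"CPU time {cpu_s} s vs. wall-clock time {wall_s} s")
print(f"peak memory ~ {mem_gib:.1f} GiB")
```

Running it reports a SIGKILL, 1020 s of CPU time against 30 s of wall-clock time, and roughly 42 GiB of peak memory, which points toward memory rather than the time limit as the thing to investigate.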


For a bit more information on memory usage: we've generally found that providing about 0.175 MB of memory per grid column (with 55 vertical layers) is adequate when running the model itself (i.e., the atmosphere_model program). The initialization program requires less memory because it allocates fewer fields. I don't have a good figure, but as a rough guess, the init_atmosphere_model program may need only about half the memory of the model. For a mesh with 535554 columns, then, you would want at least 535554 * 0.175 MB ≈ 93.7 GB of aggregate memory when running a model simulation, and initializing the model might require around 47 GB. Although most of the Cheyenne batch nodes have 64 GB of memory, not all of it is available to user processes. So it is probably wise to use at least two nodes when creating the initial conditions, and more nodes still when running the model.
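The rule of thumb above can be turned into a quick calculator. This Python sketch multiplies the column count by 0.175 MB, halves the result for the initialization step (the rough guess above, not a measured figure), and divides by the usable memory per node. The `usable_gb_per_node` value of 45 is a hypothetical placeholder, not a documented Cheyenne limit; check your system's documentation for the real figure.

```python
import math

MB_PER_COLUMN = 0.175  # rule of thumb for atmosphere_model with 55 vertical layers
INIT_FRACTION = 0.5    # rough guess: init_atmosphere_model needs ~half the memory


def memory_estimate_gb(n_columns, fraction=1.0):
    """Aggregate memory in GB (1 GB = 1000 MB) for a mesh with n_columns."""
    return n_columns * MB_PER_COLUMN * fraction / 1000.0


def min_nodes(required_gb, usable_gb_per_node):
    """Smallest node count whose combined usable memory covers required_gb."""
    return math.ceil(required_gb / usable_gb_per_node)


n_columns = 535554
usable_gb_per_node = 45.0  # HYPOTHETICAL usable memory per 64 GB node; check your system

model_gb = memory_estimate_gb(n_columns)
init_gb = memory_estimate_gb(n_columns, INIT_FRACTION)

print(f"atmosphere_model:      ~{model_gb:.1f} GB -> at least "
      f"{min_nodes(model_gb, usable_gb_per_node)} nodes")
print(f"init_atmosphere_model: ~{init_gb:.1f} GB -> at least "
      f"{min_nodes(init_gb, usable_gb_per_node)} nodes")
```

Under these assumptions the estimate is about 93.7 GB (at least three nodes) for the model and about 46.9 GB (at least two nodes) for initialization. Because both the per-column figure and the init fraction are approximations, rounding up to an extra node is the safer choice.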