Dear all,

I have run into a problem with mpirun. I successfully compiled WRF v4.2 on my cluster and tested it with wrf.exe. The run completes, but it is too slow: the ratio is 1:3 (it takes 2 hours of wall-clock time to simulate 6 hours).

My cluster has 2 nodes with 8 cores each and 6 GB of RAM per node. I have tried

> mpirun -np 8 ./wrf.exe

or

> mpirun -np 16 ./wrf.exe

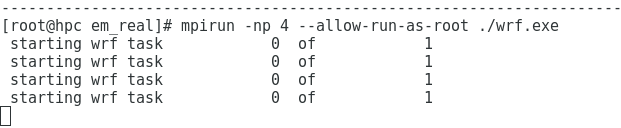

The run shows that only 1 processor is in use (see the image above).

An error also appears with this message:

mpirun noticed that process rank 0 with PID 0 on node hpc exited on signal 9 (Killed)

As I understand it, this error means the system does not have enough memory, but when I run wrf.exe without mpirun, it uses only 2 GB of the 6 GB.

Do you have any suggestions for how to overcome this problem?

Thanks

HC
