I need to run a series of 3-month simulations using the 60-3km mesh. The model runs successfully, but it only advances about 3 simulated days per 12-hour submission, which is much too slow and computationally expensive for my purposes. I have already removed all unnecessary variables and have tried to optimize the nodes:CPU:MPI ratio. That cut the allocation requirement in half, but I still need to make the model more efficient. Does anyone have suggestions on how to do this? Would changing the time step in my namelist help? Does the number of soundings I'm requesting make a big difference? Any thoughts or suggestions would be greatly appreciated.
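
To put the throughput in perspective, here is a quick back-of-the-envelope calculation in Python. It assumes a "3-month" run means about 90 simulated days (an assumption, since no exact length is given) and that every submission advances the model by the same 3 days. It also shows that the number of model steps per simulated day is 86400 / dt, so a longer time step means fewer steps. The dt values below are hypothetical examples, and whether a larger step stays numerically stable on the 60-3km mesh is a separate question.

```python
import math


def submissions_needed(sim_days_total: float, sim_days_per_job: float) -> int:
    """Number of batch submissions needed to cover the full simulation."""
    return math.ceil(sim_days_total / sim_days_per_job)


def steps_per_sim_day(dt_seconds: float) -> float:
    """Model time steps needed to advance one simulated day at time step dt."""
    return 86400.0 / dt_seconds


# Throughput reported in the post: 3 simulated days per 12-hour submission.
SIM_DAYS_PER_JOB = 3.0
WALL_HOURS_PER_JOB = 12.0

# Assumption: a "3-month" run is taken here as 90 simulated days.
SIM_DAYS_TOTAL = 90.0

jobs = submissions_needed(SIM_DAYS_TOTAL, SIM_DAYS_PER_JOB)
wall_hours = jobs * WALL_HOURS_PER_JOB
print(f"{jobs} submissions, {wall_hours:.0f} wall-clock hours")

# Hypothetical time steps (seconds): a larger dt means fewer steps
# per simulated day, provided the run remains stable.
for dt in (15.0, 18.0):
    print(f"dt={dt:.0f} s -> {steps_per_sim_day(dt):.0f} steps per simulated day")
```

Running it prints `30 submissions, 360 wall-clock hours` for a single 3-month run, followed by the step counts for the two sample time steps.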

Thank you all.

Attachments

-

namelist.atmosphere.txt1.7 KB · Views: 61

-

namelist.init_atmosphere.txt1.4 KB · Views: 50

-

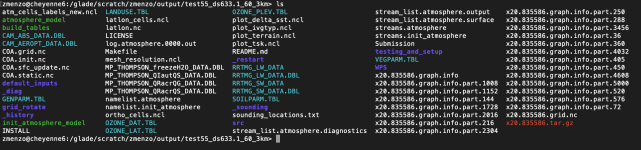

pwd.png285.4 KB · Views: 1,548

pwd.png285.4 KB · Views: 1,548 -

sounding_locations.txt255 bytes · Views: 49

-

stream_list.atmosphere.diagnostics.txt29 bytes · Views: 58

-

stream_list.atmosphere.output.txt92 bytes · Views: 49

-

streams.atmosphere.txt1.6 KB · Views: 51

-

streams.init_atmosphere.txt907 bytes · Views: 55

-

Submission.txt457 bytes · Views: 63