Hi all,

I know this is a commonly encountered and discussed issue in this forum but I haven't found a solution to this issue yet after much reading (related namelists, runscripts and error logs are attached).

What I am trying to do: Run WRF4.4.2 for 2023/07 and 2023/08 for 12, 4 and 1.33 km using PX-LSM (soil moisture turned on for first 10 days spin-up, will turn off on final run) and then use the outputs as inputs for WRF4.4.2-CMAQv5.5 coupled simulation.

What I have done so far:

1. Used relatively older WPS to create met_em files using NARR.

2. Used the met_em files, upper obs (ds351.0) and surface obs (ds461.0) data in obsgrid to create metoa_em* and wrfsfdda_d0* files for all 3 domains.

3. Using the metoa_em* and wrfsfdda_d0* files in real.exe to create boundary condition files (wrfbdy_d01, wrffdda_d0*, wrfinput_d0*, wrflowinp_d0*).

4. Using the boundary condition files to run WRF4.4.2.

The error:

d03 2023-06-21_00:00:06+02/03 in ACM PBL

-------------- FATAL CALLED ---------------

FATAL CALLED FROM FILE: <stdin> LINE: 415

RIBX never exceeds RIC, RIB(i,kte) = 0.00000000 THETAV(i,1) = NaN MOL= NaN TCONV = 0.229080185 WST = NaN KMIX = 35 UST = NaN TST = NaN U,V = NaN NaN I,J= 217 14

Trials to solve the issue:

1. Reduced and increased the time steps (doesn't work)

2. Turned off/commented out:

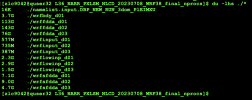

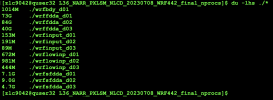

1. I have used the same metoa_em* and wrfsfdda_d0* files for all 3 domains to create boundary condition files in WRF3.8 real.exe and found that the BC files in WRF3.8 are much larger than those of WRF4.4.2 (see attached screenshots). WRF3.8 with basically the same namelist.input is running without any issues.

2. When I create metoa_em* files and run real.exe, the grid%tsk unreasonable error shows up in both WRF versions. As a workaround step, I have renamed the SKINTEMP variable in metoa_em* files as TSK and used them in for the WRF runs in 4.4.2 and 3.8 (3.8 wrf run works).

3. My concern (and possibly the reason of this issue) is why BC files in WRF4.4.2 would be are much smaller than those of WRF3.8 when created with the same metoa_em* and wrfsfdda_d0* files? To check if my WRF4.4.2 run directory is somehow missing something, I re-compiled a fresh directory of WRF4.4.2 but BC sizes are still smaller than WRF38 BC.

4. WRF4.4.2 ran for one simulation day (2023/06/21) and stopped at 2023/06/22.

Kindly help me fix this issue. Mentioning @kwerner @Ming Chen for their wisdom on this.

I know this is a commonly encountered and discussed issue in this forum, but after much reading I still have not found a solution (the related namelists, runscripts, and error logs are attached).

What I am trying to do: Run WRF4.4.2 for 2023/07 and 2023/08 at 12, 4, and 1.33 km using PX-LSM (soil moisture turned on for the first 10 days of spin-up, to be turned off for the final run) and then use the outputs as inputs for a WRF4.4.2-CMAQv5.5 coupled simulation.

What I have done so far:

1. Used a relatively older version of WPS to create met_em files from NARR.

2. Used the met_em files, upper-air obs (ds351.0), and surface obs (ds461.0) data in obsgrid to create metoa_em* and wrfsfdda_d0* files for all 3 domains.

3. Used the metoa_em* and wrfsfdda_d0* files in real.exe to create boundary condition files (wrfbdy_d01, wrffdda_d0*, wrfinput_d0*, wrflowinp_d0*).

4. Used the boundary condition files to run WRF4.4.2.

The error:

d03 2023-06-21_00:00:06+02/03 in ACM PBL

-------------- FATAL CALLED ---------------

FATAL CALLED FROM FILE: <stdin> LINE: 415

RIBX never exceeds RIC, RIB(i,kte) = 0.00000000 THETAV(i,1) = NaN MOL= NaN TCONV = 0.229080185 WST = NaN KMIX = 35 UST = NaN TST = NaN U,V = NaN NaN I,J= 217 14
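To make it easier to see which quantities have already gone bad at the failing grid point, here is a minimal sketch that parses the diagnostic line above. It uses only the Python standard library; the function name `parse_acm_fatal` is my own and not part of WRF, and the regular expressions assume the exact line layout shown in this post.

```python
import re

# Diagnostic line copied verbatim from the error output above.
LINE = ("RIBX never exceeds RIC, RIB(i,kte) = 0.00000000 THETAV(i,1) = NaN "
        "MOL= NaN TCONV = 0.229080185 WST = NaN KMIX = 35 UST = NaN "
        "TST = NaN U,V = NaN NaN I,J= 217 14")

def parse_acm_fatal(line):
    """Return (names of NaN fields, (i, j)) from an ACM PBL fatal line.

    Hypothetical helper: assumes the 'NAME = value' layout shown above.
    """
    # Single-valued fields such as 'MOL= NaN' or 'THETAV(i,1) = NaN'.
    scalars = re.findall(r"(\w+(?:\(i,\w+\))?)\s*=\s*(\S+)", line)
    # 'V' and 'J' are the tails of the paired fields handled below.
    values = {name: val for name, val in scalars if name not in ("V", "J")}

    # Paired fields: 'U,V = a b' and 'I,J= i j'.
    uv = re.search(r"U,V\s*=\s*(\S+)\s+(\S+)", line)
    if uv:
        values["U"], values["V"] = uv.group(1), uv.group(2)
    ij = re.search(r"I,J=\s*(\d+)\s+(\d+)", line)
    point = (int(ij.group(1)), int(ij.group(2))) if ij else None

    nan_fields = [name for name, val in values.items() if val.lower() == "nan"]
    return nan_fields, point

nan_fields, point = parse_acm_fatal(LINE)
print("NaN fields:", nan_fields)
print("Grid point (I, J):", point)
```

For the line above, this reports that the wind, temperature, and surface-layer quantities are all NaN at I,J = 217, 14, while TCONV and KMIX are still finite.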

Trials to solve the issue:

1. Reduced and increased the time step (neither helped).

2. Turned off/commented out:

- ! sf_surface_mosaic = 1,

- ! mosaic_cat = 8,

- guv = 0.0003, 0.0001, 0.0000,

- gt = 0.0003, 0.0001, 0.0000,

- gq = 0.00001, 0.00001, 0.0000,

3. I used the same metoa_em* and wrfsfdda_d0* files for all 3 domains to create boundary condition files with the WRF3.8 real.exe and found that the BC files from WRF3.8 are much larger than those from WRF4.4.2 (see attached screenshots). WRF3.8 with basically the same namelist.input runs without any issues.

4. When I create metoa_em* files and run real.exe, the grid%tsk unreasonable error shows up in both WRF versions. As a workaround, I renamed the SKINTEMP variable in the metoa_em* files to TSK and used those files for the WRF runs in 4.4.2 and 3.8 (the 3.8 run works).

5. My concern (and possibly the reason for this issue) is why the BC files from WRF4.4.2 are much smaller than those from WRF3.8 when both are created from the same metoa_em* and wrfsfdda_d0* files. To check whether my WRF4.4.2 run directory was somehow missing something, I compiled WRF4.4.2 again in a fresh directory, but the BC files are still smaller than the WRF3.8 ones.

6. WRF4.4.2 ran for one simulation day (2023/06/21) and stopped at 2023/06/22.
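For anyone who wants to reproduce the size comparison between the two versions, here is a minimal sketch using only the Python standard library. The file patterns are the ones listed above; the function names and the two run-directory paths are hypothetical placeholders. Size alone does not show which variables differ, so this is only a first check.

```python
import glob
import os

# File patterns named in this post; any directory passed in is hypothetical.
PATTERNS = ["wrfbdy_d01", "wrffdda_d0*", "wrfinput_d0*", "wrflowinp_d0*"]

def list_sizes(run_dir, patterns=PATTERNS):
    """Map file name -> size in bytes for every matching file in run_dir."""
    sizes = {}
    for pattern in patterns:
        for path in glob.glob(os.path.join(run_dir, pattern)):
            sizes[os.path.basename(path)] = os.path.getsize(path)
    return sizes

def compare_runs(dir_a, dir_b):
    """Return (name, bytes_a, bytes_b, ratio b/a) for files present in both."""
    a, b = list_sizes(dir_a), list_sizes(dir_b)
    rows = []
    for name in sorted(a.keys() & b.keys()):
        ratio = b[name] / a[name] if a[name] else float("nan")
        rows.append((name, a[name], b[name], ratio))
    return rows
```

Calling `compare_runs("run_wrf38", "run_wrf442")` (both directory names are placeholders) returns one row per file found in both directories, with a ratio below 1 wherever the WRF4.4.2 file is the smaller one.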

Kindly help me fix this issue. Mentioning @kwerner @Ming Chen for their wisdom on this.

Attachments

- Screen Shot 2025-03-09 at 5.33.01 PM.png (187.1 KB)

- Screen Shot 2025-03-09 at 5.33.44 PM.png (169.9 KB)

- rsl.errors.zip (2.8 MB)

- namelist.input.txt (7.5 KB)

- namelist.oa.txt (2.9 KB)

- namelist.wps.txt (2.1 KB)

- WRF4.4.2.runscript.txt (2.6 KB)