Dear WRF-Community,

At the moment I am trying to set up WRF down to a resolution of 40 m. The simulation itself runs, but looking at the results there still seem to be some problems.

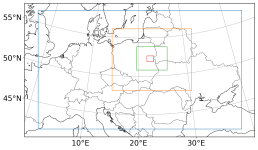

I first run D01-D04 (9 km to 200 m) in a single run and then use its output to run D05 with ndown, with a vertical refinement factor of 2. More details can be seen in the attached namelists.

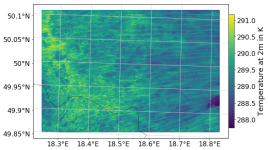

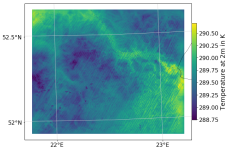
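To make the nesting easier to follow, here is a minimal sketch that derives the parent-to-child grid ratios from the grid spacings mentioned in this post (9 km, 3 km, 1 km, 200 m, 40 m). The helper name `nest_ratios` is only for illustration; the attached namelists remain the authoritative source for the actual `parent_grid_ratio` settings.

```python
# Grid spacings in metres for D01..D05, as stated in this post.
dx_m = [9000, 3000, 1000, 200, 40]


def nest_ratios(spacings):
    """Return the parent-to-child grid ratio for each nested domain.

    Illustrative helper (not part of WRF): raises if a child spacing
    does not divide its parent's spacing evenly.
    """
    ratios = []
    for parent, child in zip(spacings, spacings[1:]):
        if parent % child != 0:
            raise ValueError(f"{parent} m is not an integer multiple of {child} m")
        ratios.append(parent // child)
    return ratios


ratios = nest_ratios(dx_m)
print(ratios)  # ratios for D02..D05 relative to their parents
```

With these spacings the two outer nests use a ratio of 3 and the two innermost nests (200 m and 40 m) use a ratio of 5.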

Looking at T2, Q2 and the 10 m wind, I observe some kind of ‘stripes’ or wave-like structures, as you can see for example for T2 in the attached image (07.06.2018, 7 am, 2 hours after starting the LES).

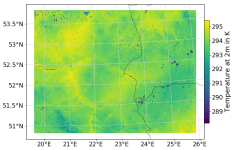

My first guess was that the problems are caused by the high spatial resolution or the use of LES in this domain. However, it turned out that these structures, although broader, also appear in my coarser domains: they show up in the 1 km and 200 m domains as well (3 km and 9 km seem to be OK), where I am not using LES but the Shin-Hong PBL scheme instead. I have already tried increasing the vertical resolution and decreasing the time step, with no luck.

Does anyone have a hint on what to try to get rid of these structures? If you need any further information about my setup, do not hesitate to ask.

Thanks a lot,

Sarah

P.S. I also looked at this thread: http://forum.mmm.ucar.edu/phpBB3/viewtopic.php?f=58&t=5448, but I think the stripes in my case look a bit different and more 'wave-like', so I suspect they do not have the same cause. Also, I am using WRF 4.1.1, and it seemed that the problem in Tian's case occurred for versions <3.9.
