oscarvdvelde

I am running WRF practically out of the box on the Amazon cloud.

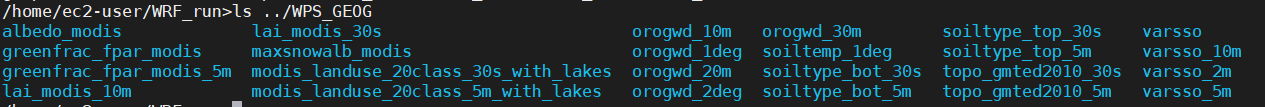

I definitely specified `geog_data_res = "default","2m","30s"` for the geographical fields, and the 30s files appear to be present in my WPS_GEOG directory.

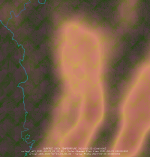

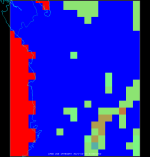

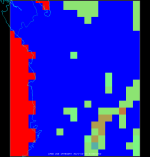
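For reference, here is a sketch of the `&geogrid` record in `namelist.wps` that this setup implies. Only the `geog_data_res` entries are taken from the text above. The `parent_id` and `parent_grid_ratio` values are assumed from the 27/9/3 km nesting (d02 inside d01, d03 inside d02), and all other required entries are omitted.

```fortran
&geogrid
 parent_id         = 1,   1,   2,             ! assumed: d02 nested in d01, d03 nested in d02
 parent_grid_ratio = 1,   3,   3,             ! 27 km -> 9 km -> 3 km
 geog_data_res     = "default", "2m", "30s",  ! one static-data resolution per domain
 dx                = 27000,                   ! d01 grid spacing in metres (27 km)
 dy                = 27000,
/
```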

However, the model output in the 9 km and 3 km domains over NW Colombia shows blocky, 27 km-sized land-use and land-sea mask features and heavily smoothed terrain height.

How could I improve this?
