Hello,

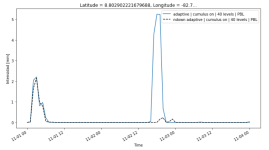

I have been trying to run an ndown case, but when I execute wrf.exe with only domain 2, a CFL error stops the model after 40 seconds.

I don't know what is happening, because I ran the same model configuration with two-way nesting and it worked. I can't find any inconsistencies or incompatibilities, but maybe I'm missing something.

I have attached the latest namelist, which is configured to run wrf.exe (namelist.input), and the earlier one prepared to run ndown.exe (namelist3.input). The latter is the same one I used to run real.exe for both domains, but with io_form_auxinput2=2 set for the ndown.exe run.

Thanks in advance,

Jana.
