Hello,

I have been attempting to run WRF over central Chile, a region with complex topography due to the Andes mountain range. I use three nested domains at 36, 12, and 4 km grid spacing and have tested outer-domain time steps of 6dx, 4dx, and 3dx (216, 144, and 108 seconds, respectively, with dx = 36 km). I am using the ERA5 dataset with 50 vertical layers. In every case, when reviewing the rsl.error and rsl.out files, I find messages like the following:
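
The three time steps are all multiples of the 36 km outer grid spacing (6 × 36 = 216 s, 4 × 36 = 144 s, 3 × 36 = 108 s). In WRF only the d01 `time_step` is set directly, and each nest runs at its parent's step divided by `parent_time_step_ratio`. The sketch below assumes a ratio of 3 for both nests (matching the 36/12/4 km grid ratio); that ratio is an assumption, not something stated in this post.

```python
# Sketch: per-domain time steps implied by the "k * dx" rule of thumb
# (dt in seconds, dx in km).
# Assumption: parent_time_step_ratio = 3 for every nest (not stated in the post).

OUTER_DX_KM = 36.0
PARENT_TIME_STEP_RATIO = 3
N_DOMAINS = 3  # d01 (36 km), d02 (12 km), d03 (4 km)


def nest_time_steps(k, outer_dx_km=OUTER_DX_KM,
                    ratio=PARENT_TIME_STEP_RATIO, n_domains=N_DOMAINS):
    """Return [dt_d01, dt_d02, ...] for an outer time step of k * dx."""
    dt = k * outer_dx_km
    steps = []
    for _ in range(n_domains):
        steps.append(dt)
        dt /= ratio  # each nest divides its parent's step by the ratio
    return steps


if __name__ == "__main__":
    for k in (6, 4, 3):
        print(f"{k}dx:", nest_time_steps(k))
```

Under that assumption the 216 s run uses 216, 72, and 24 s on d01, d02, and d03, so d01 always carries the largest time step.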

From `rsl.error.0007`:

`2000-06-13_21:23:24 MAX AT i,j,k: 97 62 17 vert_cfl,w,d(eta)= 2.01002645 -2.92913198 4.20067906E-02`

From `rsl.error.0007`:

`2000-06-13_21:25:12 1 points exceeded cfl=2 in domain d01 at time 2000-06-13_21:25:12 hours`
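
The vertical Courant number grows linearly with the time step, which is one reason such warnings can show up on d01 (the domain with the largest step) even though its horizontal grid is coarse. WRF evaluates this number in its eta coordinate (the message reports w and d(eta)); the sketch below instead uses the simpler height-based approximation C ≈ |w| Δt / Δz, with a hypothetical layer thickness and vertical velocity, purely to illustrate how C scales with Δt. The function names are illustrative, not part of WRF.

```python
# Height-based approximation of the vertical Courant number, C = |w| * dt / dz.
# WRF itself computes vert_cfl in eta coordinates; this is only an illustration.


def vertical_courant(w_ms, dt_s, dz_m):
    """Approximate vertical Courant number for vertical velocity w_ms (m/s)."""
    return abs(w_ms) * dt_s / dz_m


def max_dt_for_courant(w_ms, dz_m, c_max=2.0):
    """Largest time step (s) that keeps |w| * dt / dz at or below c_max."""
    return c_max * dz_m / abs(w_ms)


# Hypothetical values, not taken from the runs described in this post.
DZ_M = 300.0  # assumed layer thickness (m)
W_MS = 3.0    # assumed vertical velocity magnitude (m/s)

if __name__ == "__main__":
    for dt in (216.0, 144.0, 108.0):
        print(f"dt = {dt:5.0f} s -> C = {vertical_courant(W_MS, dt, DZ_M):.2f}")
    print(f"dt limit for C <= 2: {max_dt_for_courant(W_MS, DZ_M):.0f} s")
```

With these made-up numbers, only the 216 s step exceeds C = 2; halving Δt halves C, whereas thinner layers or stronger updrafts (for example over steep terrain) raise it.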

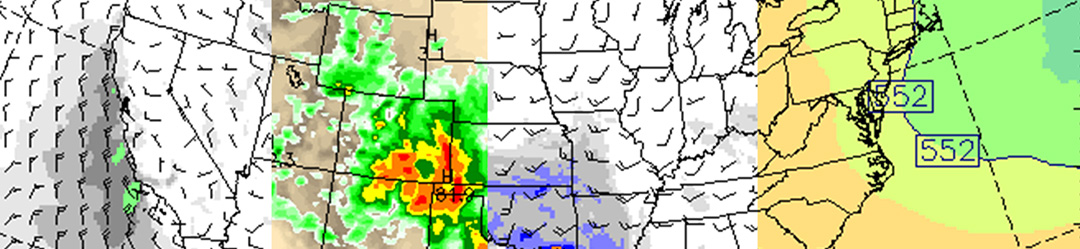

I have attached the following summary table:

In the table, dt is the time step; #CFL2(error) is the number of times the CFL message appeared; CFL Maximo (error) is the maximum CFL value across all rsl.error* files; and #CFL>2: dom i is the number of times the CFL message appeared in domain i.
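
Columns like these can be rebuilt from the logs with a short script. The sketch below matches the two message formats quoted above with regular expressions; the function names are hypothetical, and it assumes all `rsl.error.*` files sit in one run directory. Counts are summed over every file, so a message that appears in several rsl files is counted once per file.

```python
import glob
import os
import re
from collections import Counter

# Regexes for the two message formats quoted in this post.
EXCEED_RE = re.compile(r"(\d+)\s+points exceeded cfl=2 in domain (d\d+)")
VERT_CFL_RE = re.compile(r"vert_cfl,w,d\(eta\)=\s*([-+0-9.Ee]+)")


def summarize_cfl(lines):
    """Count 'exceeded cfl=2' messages per domain and find the largest vert_cfl."""
    per_domain = Counter()
    max_cfl = None
    for line in lines:
        match = EXCEED_RE.search(line)
        if match:
            per_domain[match.group(2)] += 1
        match = VERT_CFL_RE.search(line)
        if match:
            value = float(match.group(1))
            if max_cfl is None or value > max_cfl:
                max_cfl = value
    return {
        "n_messages": sum(per_domain.values()),  # total CFL warnings
        "max_vert_cfl": max_cfl,                 # None if no MAX AT lines
        "per_domain": dict(per_domain),          # domain name -> count
    }


def summarize_run(run_dir="."):
    """Apply summarize_cfl to every rsl.error.* file in run_dir."""
    lines = []
    for path in sorted(glob.glob(os.path.join(run_dir, "rsl.error.*"))):
        with open(path, errors="replace") as handle:
            lines.extend(handle)
    return summarize_cfl(lines)
```

Calling `summarize_run()` from the run directory of each experiment would give one row of the table per time step.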

It is worth noting that all my simulations run to completion and the precipitation results look reasonable; I only noticed these messages when reviewing the WRF log output. While searching for solutions, I found various recommendations on the forum, such as:

- w_damping = 1: This option activates vertical velocity damping, which damps w where the vertical Courant number becomes large, to prevent instabilities caused by strong vertical motion.

- diff_6th_opt = 2: This setting applies sixth-order numerical diffusion to remove short-wavelength (2Δx) noise; option 2 additionally prohibits up-gradient diffusion.

- damp_opt = 2: This parameter activates Rayleigh damping to help control gravity wave reflections at the model top boundary.

- epssm = 0.9: This is the time off-centering coefficient for vertically propagating sound waves (default 0.1); larger values damp these waves more strongly. Over complex terrain with large topographic gradients, increasing it is recommended.
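
To try these suggestions together, they would go in the `&dynamics` group of `namelist.input`. The helper below is a hypothetical sketch that prints that group. Which options take a single value and which take one value per domain is an assumption here (w_damping and damp_opt as single values, diff_6th_opt and epssm per domain with max_dom = 3) and should be checked against README.namelist for your WRF version.

```python
# Hypothetical helper: render the forum-suggested options as a Fortran
# namelist group. Single vs. per-domain values are assumptions (max_dom = 3).
SUGGESTED_DYNAMICS = {
    "w_damping": [1],
    "diff_6th_opt": [2, 2, 2],
    "damp_opt": [2],
    "epssm": [0.9, 0.9, 0.9],
}


def render_namelist_group(name, settings):
    """Return the text of a namelist group such as '&dynamics ... /'."""
    width = max(len(key) for key in settings)  # align the '=' signs
    lines = [f"&{name}"]
    for key, values in settings.items():
        joined = ", ".join(str(v) for v in values)
        lines.append(f" {key.ljust(width)} = {joined},")
    lines.append("/")
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_namelist_group("dynamics", SUGGESTED_DYNAMICS))
```

The printed block would replace or merge with the matching entries of an existing `&dynamics` group rather than be appended as a second group.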

How can I solve this problem? What would you recommend to prevent this instability without reducing the time step?