Hello,

This post is a follow-up on that one.

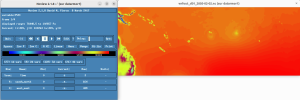

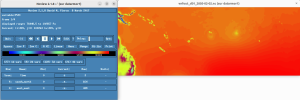

Despite no trace of errors in rsl* files from ndown.exe, it appears that its outputs are corrupted on some variables (PSFC & other pressures, Qvapor ...) but not on some others (T2, TSK, SST, U10, V10 - which unfortunately I focused on at first glance). To illustrate the problem, I attached screenshots from ncview, respectively of the wrfndi_d02 file (input to ndown) and wrfinput_d02 file (output of ndown) for the PSFC variable :

As one can see, the values of pressure in the ndown.exe output are far off and strangely structured.
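To put numbers on this rather than relying on the ncview colour scale, a check along the following lines could be run on PSFC from both files. This is only a sketch: reading the field out of the netCDF file is left out, the helper names `summarize` and `fraction_outside` are hypothetical, the 30000-110000 Pa bounds are a loose assumption for plausible surface pressure, and the sample numbers are made up for illustration, not taken from my files.

```python
def summarize(values):
    """Return (min, max, mean) of a flat sequence of numbers."""
    values = list(values)
    return min(values), max(values), sum(values) / len(values)


def fraction_outside(values, low, high):
    """Fraction of points whose value falls outside [low, high].

    NaN values fail the range test and are therefore counted as outside.
    """
    values = list(values)
    bad = sum(1 for v in values if not (low <= v <= high))
    return bad / len(values)


# Made-up placeholder values in Pa, NOT read from wrfndi_d02 / wrfinput_d02.
psfc_before = [101200.0, 100850.0, 99500.0, 100900.0]
psfc_after = [101200.0, 2.3e7, -4500.0, 100900.0]

# Assumed loose bounds for a physically plausible surface pressure (Pa).
LOW, HIGH = 30000.0, 110000.0

print(summarize(psfc_before), fraction_outside(psfc_before, LOW, HIGH))
print(summarize(psfc_after), fraction_outside(psfc_after, LOW, HIGH))
```

Running the same check on a variable that looks fine (T2, for instance, with suitable bounds) would show whether the corruption is really limited to the pressure and moisture fields.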

The ndown input files (i.e. the previous run's wrfout*) are all fine as well:

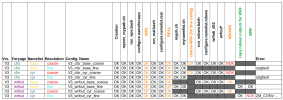

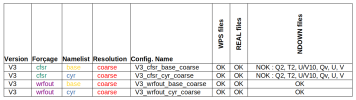

I'm still working on switching from MODIS to USGS... But is there anything else in the namelist, or in the variable lists extracted from the wrfinput and from the previous coarse run's wrfout (see attached files), that could be related to this problem?

Here's a (filtered) diff between the two variable lists:

diff wrfinput_variables_list.txt wrfoutput_variables_list.txt | grep -v 'CEN\|DX\|DY\|WEST\|SOUTH\|LAT\|DZ\|I_P\|J_P\|STAND\|DT'

1,3c1,11

< :AERCU_FCT = 1.f ;

< :AERCU_OPT = 0 ;

< :AUTO_LEVELS_OPT = 2 ;

---

> :AER_ANGEXP_OPT = 1 ;

> :AER_ANGEXP_VAL = 1.3f ;

> :AER_AOD550_OPT = 1 ;

> :AER_AOD550_VAL = 0.12f ;

> :AER_ASY_OPT = 1 ;

> :AER_ASY_VAL = 0.f ;

> :AER_OPT = 0 ;

> :AER_SSA_OPT = 1 ;

> :AER_SSA_VAL = 0.f ;

> :AER_TYPE = 1 ;

10,11c18,22

---

> :BUCKET_J = -1.f ;

> :BUCKET_MM = -1.f ;

15,16c26,28

< :DIFF_6TH_SLOPEOPT = 0 ;

< :DIFF_6TH_THRESH = 0.1f ;

---

> :DFI_OPT = 0 ;

> :DIFF_6TH_FACTOR = 0.12f ;

> :DIFF_6TH_OPT = 0 ;

18,24c30,33

< :ETAC = 0.f ;

---

> :FEEDBACK = 1 ;

28c37,38

< :GMT = 6.f ;

---

> :GMT = 0.f ;

> :GRAV_SETTLING = 0 ;

33,34d42

< :GWD_OPT = 0 ;

< :HYBRID_OPT = 0 ;

36,39c44,51

< :IDEAL_CASE = 0 ;

< :ISICE = 15 ;

< :ISLAKE = 21 ;

---

> :ICLOUD = 1 ;

> :ICLOUD_CU = 0 ;

> :ISFFLX = 1 ;

> :ISFTCFLX = 1 ;

> :ISHALLOW = 1 ;

> :ISICE = 24 ;

> :ISLAKE = -1 ;

41,44c53,56

< :ISURBAN = 13 ;

< :ISWATER = 17 ;

< :JULDAY = 33 ;

---

> :ISURBAN = 1 ;

> :ISWATER = 16 ;

> :JULDAY = 1 ;

51,52c63,66

< :MMINLU = "MODIFIED_IGBP_MODIS_NOAH" ;

---

> :MFSHCONV = 0 ;

> :MMINLU = "USGS" ;

> :MOIST_ADV_OPT = 1 ;

54,55c68,70

< :NUM_LAND_CAT = 21 ;

< :PARENT_GRID_RATIO = 3 ;

---

> :NUM_LAND_CAT = 24 ;

> :OBS_NUDGE_OPT = 0 ;

> :PARENT_GRID_RATIO = 5 ;

58a74,75

60a78,79

> :SCALAR_ADV_OPT = 2 ;

> :SCALAR_PBLMIX = 0 ;

64d82

< :SF_SURFACE_MOSAIC = 0 ;

69,73c87,92

---

> :SHCU_PHYSICS = 2 ;

> :SMOOTH_OPTION = 2 ;

77,78c96,98

< :START_DATE = "2005-02-02_06:00:00" ;

---

> :START_DATE = "2005-01-01_00:00:00" ;

> :STOCH_FORCE_OPT = 0 ;

80,81c100,105

< :TITLE = " OUTPUT FROM REAL_EM V4.2.1 PREPROCESSOR" ;

---

> :SWINT_OPT = 0 ;

> :SWRAD_SCAT = 1.f ;

> :TITLE = " OUTPUT FROM WRF V3.6.1 MODEL" ;

> :TKE_ADV_OPT = 2 ;

> :TRACER_PBLMIX = 1 ;

83,88c107,110

< :USE_MAXW_LEVEL = 0 ;

< :USE_THETA_M = 0 ;

< :USE_TROP_LEVEL = 0 ;

---

> :W_DAMPING = 0 ;
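For anyone who wants to reproduce this comparison without the noise of diff hunk headers, here is a minimal Python sketch of the same idea. It assumes the two lists contain global-attribute lines in the `:NAME = value ;` form shown above; the function names `parse_attributes` and `compare_attributes` are hypothetical, and unlike `grep -v` (which drops any line containing one of the patterns) the filter here looks at attribute names only.

```python
import re

# Matches one global-attribute line, e.g. ':ISWATER = 16 ;'
ATTR_RE = re.compile(r'^\s*:(\w+)\s*=\s*(.+?)\s*;\s*$')

# Same substrings as in the grep -v filter above.
IGNORED = ("CEN", "DX", "DY", "WEST", "SOUTH", "LAT",
           "DZ", "I_P", "J_P", "STAND", "DT")


def parse_attributes(text):
    """Return {name: value-as-string} for every ':NAME = value ;' line."""
    attrs = {}
    for line in text.splitlines():
        match = ATTR_RE.match(line)
        if match:
            attrs[match.group(1)] = match.group(2)
    return attrs


def compare_attributes(left, right, ignored=IGNORED):
    """Return (only in left, only in right, {name: (left, right)} for changed values)."""
    def keep(name):
        return not any(pattern in name for pattern in ignored)

    only_left = sorted(n for n in left if n not in right and keep(n))
    only_right = sorted(n for n in right if n not in left and keep(n))
    changed = {n: (left[n], right[n])
               for n in sorted(left)
               if n in right and left[n] != right[n] and keep(n)}
    return only_left, only_right, changed


# A few attribute lines copied from the diff above.
wrfinput = parse_attributes("""
    :HYBRID_OPT = 0 ;
    :ISWATER = 17 ;
    :NUM_LAND_CAT = 21 ;
""")
wrfout = parse_attributes("""
    :FEEDBACK = 1 ;
    :ISWATER = 16 ;
    :NUM_LAND_CAT = 24 ;
""")

only_in, only_out, changed = compare_attributes(wrfinput, wrfout)
print(only_in)   # ['HYBRID_OPT']
print(only_out)  # ['FEEDBACK']
print(changed)   # {'ISWATER': ('17', '16'), 'NUM_LAND_CAT': ('21', '24')}
```

Separating "missing on one side" from "present on both sides with a different value" makes it easier to spot the attributes that genuinely disagree between the two files (land-use indices, grid ratio) as opposed to those that only exist in one WRF version.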

FYI: I successfully ran the whole process (WPS/real/ndown/WRF) with another test configuration based only on CFSR reanalysis, with the exact same namelist parameters for the coarse and fine runs, as well as the same WRF version. So the problem seems to arise within ndown because of some incompatibility between the old coarse run's wrfout* (WRF 3.6.1) and my current configuration (WRF 4.2.1).
