Hi,

I am using WRF 4.1.3 to downscale hourly ERA5 data with four nested domains at a grid ratio of 1:3 (30 km, 10 km, 3.33 km, 1.11 km). I ran some tests to analyze how sensitive WRF is to the length of the simulation. The test runs use exactly the same configuration and physics parameters and differ only in their length.

- Test 1: Run for 7 days (with 12 hours spin-up time)

- Test 2: Separate runs for 1 day each (36 hours per run, including 12 hours spin-up time)
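
To make the layout of test 2 concrete, here is a minimal sketch of how the daily runs line up in time. It assumes that each 36-hour run consists of 12 hours of spin-up followed by the 24 hours that are analysed. The start date, the number of days, and the name `daily_run_windows` are only placeholders for illustration, not my actual settings.

```python
from datetime import datetime, timedelta

SPINUP = timedelta(hours=12)  # spin-up discarded from every run
KEEP = timedelta(hours=24)    # assumed: the remaining 24 h of each 36 h run are analysed


def daily_run_windows(first_day, n_days):
    """Return (run_start, analysis_start, run_end) for each reinitialized run."""
    windows = []
    for i in range(n_days):
        analysis_start = first_day + timedelta(days=i)
        run_start = analysis_start - SPINUP  # run begins 12 h before the analysed day
        run_end = analysis_start + KEEP      # and ends when the analysed day ends
        windows.append((run_start, analysis_start, run_end))
    return windows


# Hypothetical start date; seven days chosen to match the length of test 1.
windows = daily_run_windows(datetime(2020, 1, 1), 7)
for run_start, analysis_start, run_end in windows:
    print(run_start, "->", run_end, "| analysed from", analysis_start)
```

With this layout the analysed windows are contiguous, so the stitched test 2 series covers the same hours as test 1 after its spin-up.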

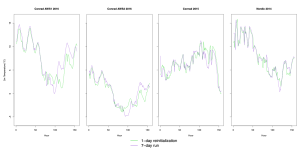

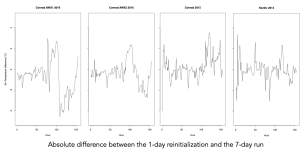

Both tests ran with the same number of processors and nodes. The results show the same overall pattern, e.g. for temperature in degrees Celsius, but they still differ considerably: individual hourly values are up to 4 degrees apart. I also cannot see a clear pattern indicating that my spin-up time is too short. I attached the time series for test 1 and test 2, together with the absolute temperature difference, at the locations of four weather stations. The deviation between test 1 and test 2 seems to grow over the simulation time, but this trend is not clear at every station. Since I am using reanalysis data, I assumed that reinitializing WRF every day is not necessary, so that running WRF continuously for a month or a year should be fine.
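
The comparison described above can be sketched roughly as follows. This is a simplified illustration, not my exact script: it assumes both series are already aligned hour by hour at one station with the spin-up removed, and the temperature values are made up purely to show the calculation. The function names `hourly_abs_diff` and `daily_means` are my own.

```python
def hourly_abs_diff(t_long, t_daily):
    """Absolute temperature difference per hour between two aligned series."""
    if len(t_long) != len(t_daily):
        raise ValueError("series must cover the same hours")
    return [abs(a - b) for a, b in zip(t_long, t_daily)]


def daily_means(values, hours_per_day=24):
    """Average the hourly values over consecutive blocks of one day."""
    means = []
    for start in range(0, len(values), hours_per_day):
        block = values[start:start + hours_per_day]
        means.append(sum(block) / len(block))
    return means


# Made-up example data (two days), NOT output from the actual runs.
t_long = [10.0] * 48                  # continuous run (test 1)
t_daily = [10.5] * 24 + [12.0] * 24   # stitched daily runs (test 2)

diff = hourly_abs_diff(t_long, t_daily)
per_day = daily_means(diff)
print("max hourly difference:", max(diff))
print("mean difference per day:", per_day)
```

If the mean difference per day rises steadily, that would point to a drift between the continuous run and the reinitialized runs rather than to random scatter.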

Could you please let me know why there is this relatively large difference between the two test runs that only differ in the length of the simulation (minus the spin-up time)? Is this behaviour to be expected, or am I missing something? Any help would be appreciated.

Thanks!
