meteoadriatic


Hi, given all the tests you have done so far, I would be pretty surprised if it turned out that the reason for the simulation failures is not the PBL choice. It seems we will know soon. Good luck!



Hello,

Well, I'm surprised to hear this.

I'm not sure the radiation scheme is the source of the problem. It might be, but it might also not be: the model might crash because of an unrealistic model state at that particular point in time. I think that is the way to go with debugging. Can you set the wrfout frequency so that it dumps the model state right before the crash (the closer to the crash, the better), and then look into it and see if there is anything unrealistic? That might guide you toward the solution.
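As a starting point for the "anything unrealistic" check, here is a minimal sketch that scans one model-state frame for NaN/inf and out-of-range values. It is plain Python and assumes the fields were already read from the last wrfout frame and flattened into lists (e.g. with a netCDF reader, not shown). The variable names follow common wrfout fields (`T2`, `U`, `V`, `W`), but the plausibility ranges are illustrative assumptions, not WRF limits.

```python
import math

# Illustrative plausibility ranges (assumptions; tune for your case).
# Units: T2 in K, U/V/W in m/s.
PLAUSIBLE_RANGES = {
    "T2": (180.0, 340.0),
    "U": (-100.0, 100.0),
    "V": (-100.0, 100.0),
    "W": (-30.0, 30.0),
}


def find_suspicious_values(fields, ranges=PLAUSIBLE_RANGES):
    """Return {name: [(index, value), ...]} for NaN/inf or out-of-range values.

    `fields` maps a variable name to a flat sequence of values, e.g. one
    wrfout time frame flattened with `.ravel().tolist()`. Variables without
    a configured range are only checked for NaN/inf.
    """
    report = {}
    for name, values in fields.items():
        low, high = ranges.get(name, (-math.inf, math.inf))
        bad = [
            (i, v)
            for i, v in enumerate(values)
            if not math.isfinite(v) or v < low or v > high
        ]
        if bad:
            report[name] = bad
    return report


if __name__ == "__main__":
    # Toy frame: one NaN temperature and one very strong updraft.
    frame = {
        "T2": [271.3, 270.9, float("nan")],
        "W": [0.4, -1.2, 45.0],
    }
    for name, bad in find_suspicious_values(frame).items():
        print(name, bad)
```

Looking at where the flagged indices sit (over the steepest terrain, near the model top, at the nest boundary) is usually more informative than the values alone.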

For such small grid spacings, instead of YSU, you would probably be better off with scale-aware schemes (Shin-Hong or SMS-3DTKE) or an LES setup.

Hello,

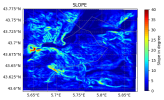

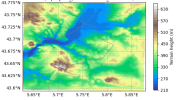

Oh, in that case the too steep slope could very likely be the cause of crashes and smoothing will help!
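The following sketch shows why smoothing helps. It applies a 1-2-1 filter to a 1-D terrain transect and computes the steepest slope between neighbouring grid points as atan(|Δh| / dx). This is the same kind of weighting that the `1-2-1` `smooth_option` in WPS's GEOGRID.TBL refers to, but real WPS smoothing works on the 2-D field. The transect, the 111 m spacing and the pass counts are hypothetical.

```python
import math


def smooth_121(heights, passes=1):
    """Apply a 1-2-1 filter along a 1-D transect; the endpoints are held fixed."""
    h = list(heights)
    for _ in range(passes):
        h = [h[0]] + [
            0.25 * h[i - 1] + 0.5 * h[i] + 0.25 * h[i + 1]
            for i in range(1, len(h) - 1)
        ] + [h[-1]]
    return h


def max_slope_deg(heights, dx):
    """Steepest slope (degrees) between adjacent points spaced dx metres apart."""
    return max(
        math.degrees(math.atan(abs(b - a) / dx))
        for a, b in zip(heights, heights[1:])
    )


if __name__ == "__main__":
    dx = 111.0  # metres, hypothetical innermost-grid spacing
    transect = [500.0, 520.0, 650.0, 600.0, 610.0]  # hypothetical terrain heights (m)
    print(f"raw:        {max_slope_deg(transect, dx):.1f} deg")
    for n in (1, 2, 4):
        smoothed = smooth_121(transect, passes=n)
        print(f"{n} pass(es): {max_slope_deg(smoothed, dx):.1f} deg")
```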

Hello,

I think a 40-degree slope is too much. From the literature, it looks like the limit also depends on the vertical distance between model levels:

"These predictions nearly match what is found in practice by trial and error: one needs dz > 32 m for max slope = 42° and dz > 25 m for max slope = 28° to keep the WRF model numerically stable (see Section 3.3)."

You defined the eta levels yourself, so check the real.exe output to see how dense they are, that is, what the minimum vertical distance between your two closest levels is, and compare it with the findings quoted from that paper.
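One way to do that comparison is sketched below. In WRF input/output files the geopotential on the staggered (w) levels is stored as `PH` (perturbation) plus `PHB` (base state), so the level heights are (PH + PHB) / g. The sketch takes one column of those values, read with any netCDF reader (not shown), and finds the thinnest layer. It then looks up the quoted (slope, dz) pairs. Using only those two pairs, and choosing the stricter one that covers the slope, is an assumption of this sketch, not a rule from the paper.

```python
G = 9.81  # m s-2, the value WRF uses for gravity

# (max slope in degrees, required minimum dz in metres), quoted from the paper above.
QUOTED_LIMITS = [(28.0, 25.0), (42.0, 32.0)]


def interface_heights(ph, phb):
    """Heights (m) of the w-levels of one column from PH and PHB (m2 s-2)."""
    return [(p + b) / G for p, b in zip(ph, phb)]


def min_layer_thickness(z):
    """Thinnest layer (m) between consecutive level heights."""
    return min(upper - lower for lower, upper in zip(z, z[1:]))


def required_dz(max_slope_deg):
    """Quoted dz for the smallest quoted slope >= max_slope_deg, or None if steeper than all quoted cases."""
    for slope, dz in QUOTED_LIMITS:
        if max_slope_deg <= slope:
            return dz
    return None


if __name__ == "__main__":
    # Hypothetical column: levels at 0, 20, 50 and 95 m, with PH set to zero.
    phb = [0.0, 20.0 * G, 50.0 * G, 95.0 * G]
    ph = [0.0] * len(phb)
    dz_min = min_layer_thickness(interface_heights(ph, phb))
    print(f"thinnest layer: {dz_min:.1f} m; quoted need for 40 deg: dz > {required_dz(40.0)} m")
```

Repeating this over the columns with the steepest terrain shows whether your custom eta levels are thinner than the quoted values.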

Maybe this helps!

At this point, I would like to summarize the steps I have taken so far:

Initial Issue

I initially encountered a segmentation fault while using the adaptive time step, even with small target CFL values and other recommended parameters. The error occurred on a call of the long-wave radiation scheme (RRTMG), which in this case runs every 10 minutes. A typical error message looked like this:

```
RRTMG LW CLWRF interpolated GHG values year: 2017 julian day: 51.26451
co2vmr: 4.062334790387511E-004 n2ovmr: 3.284720907739753E-007
ch4vmr: 1.864612663469318E-006 cfc11vmr: 3.065397540727884E-010
cfc12vmr: 4.977012290951540E-010
Caught signal 11 (Segmentation fault: address not mapped to object at address 0xffffffe02f2fb560)
```

This issue occurred primarily with my initial setup, which included:

- 3 domains with a 1:9 nesting ratio (9 km / 1 km / 111.111 m)

- QNSE PBL physics

- Corine Land Cover land use with a custom table for compatibility

- WRF version 4.4.2
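A crash log like the one above also shows which scheme was running when a process died. To check this across all processes, one option is to print the last few lines each rsl.error.* file logged before "Caught signal". The sketch below is plain Python. The `rsl.error.*` pattern, the three-line context and the idea that the preceding lines identify the active scheme are assumptions to check against your own logs.

```python
import glob
from collections import deque


def context_before_signal(lines, marker="Caught signal", n=3):
    """Return the last n non-empty lines before the first `marker` line, or None if absent."""
    recent = deque(maxlen=n)
    for line in lines:
        if marker in line:
            return list(recent)
        if line.strip():
            recent.append(line.strip())
    return None


def scan_rsl_files(pattern="rsl.error.*", n=3):
    """Map each matching rsl file to the lines it logged just before the signal."""
    results = {}
    for path in sorted(glob.glob(pattern)):
        with open(path, errors="replace") as handle:
            context = context_before_signal(handle, n=n)
        if context is not None:
            results[path] = context
    return results


if __name__ == "__main__":
    for path, context in scan_rsl_files().items():
        print(path)
        for line in context:
            print("    " + line)
```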

Steps Taken to Address the Issue

1. Standardizing Domain Ratios and Setup

I adopted a 1:3 domain ratio with 5 domains (9 km / 3 km / 1 km / 333 m / 111 m), as per WRF recommendations, and also:

- Switched to WRF version 4.6.1

- Replaced Corine Land Cover with USGS default land use (24 classes)

Despite these changes, the model still crashed for the same reason after producing 9 hours of output.

2. Adjusting Radiation Time Step and Adding w_damping

- Set the radiation time step (radt) to 9 minutes instead of 10.

- Experimented with various other radt values and activated the w_damping option.

However, the simulation still failed for the same reason, producing less than 9 hours of output.
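When changing radt to test whether the radiation call is the trigger, it helps to check whether the failure times actually land on radiation steps. The sketch takes failure times in minutes since the model start, for example from the last time stamp in the rsl or wrfout files. It reports whether each one lies within a tolerance of a multiple of radt. The helper and its tolerance are hypothetical. Note that the two settings must actually be distinguishable. For example, a hypothetical crash at exactly 540 minutes (9 h) is a multiple of both 9 and 10, so it cannot separate radt = 9 from radt = 10.

```python
def on_radiation_step(crash_minutes, radt, tolerance=0.5):
    """For each crash time (minutes since model start), report whether it lies
    within `tolerance` minutes of a multiple of radt (also in minutes)."""
    flags = []
    for t in crash_minutes:
        remainder = t % radt
        flags.append(min(remainder, radt - remainder) <= tolerance)
    return flags


if __name__ == "__main__":
    # Hypothetical crash times in minutes since the model start.
    crashes = [540.0, 547.0]
    for radt in (10, 9):
        print(f"radt = {radt}: {on_radiation_step(crashes, radt)}")
```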

3. Changing PBL Scheme

- Removed QNSE and tested the YSU PBL scheme.

- This resolved the segmentation fault but introduced a new issue: instabilities due to CFL errors, as detected in the rsl files, even with a small target CFL of 0.2.

This approach also proved unsuccessful.
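For reference, the Courant numbers behind CFL problems are |u|Δt/Δx in the horizontal and |w|Δt/Δz in the vertical. The sketch below evaluates both for made-up values: a 15 m/s wind on the 111 m grid, an 8 m/s updraft in a 20 m layer and a 1 s step, all hypothetical. It shows how a thin layer over steep terrain can push the vertical number well above the horizontal one. It also gives the step needed to stay at a chosen Courant number.

```python
def courant(speed, dt, spacing):
    """Courant number |speed| * dt / spacing (speed in m/s, dt in s, spacing in m)."""
    return abs(speed) * dt / spacing


def max_dt_for(speed, spacing, target):
    """Largest dt (s) that keeps |speed| * dt / spacing at or below `target`."""
    return target * spacing / abs(speed)


if __name__ == "__main__":
    dt = 1.0  # s, hypothetical innermost-domain step
    print(f"horizontal Courant: {courant(15.0, dt, 111.0):.3f}")
    print(f"vertical Courant:   {courant(8.0, dt, 20.0):.3f}")
    print(f"dt for vertical Courant 0.2: {max_dt_for(8.0, 20.0, 0.2):.2f} s")
```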

4. Smoothing Topography

I applied topography smoothing and observed an improvement: the simulation ran for 30 hours instead of the usual 9 hours. However, the same segmentation fault eventually returned.

5. Adjusting Node Configuration

I tested running the simulation on a single node with full memory allocation. This resulted in a different issue:

- A segmentation fault occurred in the cumulus scheme (cu_physics), resembling the RRTMG LW segmentation fault.

6. Testing New Configurations

I am now exploring the following:

- Disabling PBL parameterization entirely (bl_pbl_physics = 0) and using km_opt = 5 (SMS-3DTKE), both with and without topography smoothing.

- Applying additional smoothing to the topography and revisiting the original setup with QNSE PBL active.

For now, the only thing that helps, at a high computational cost, is using a small constant time step (adaptive time stepping turned off), typically 5-10 s for the first domain. However, this is probably not a universal fix: for a different simulation period it may well give the same error again. With this, I was able to run my initial setup, though.
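The numbers below show the cost of the small constant step. The sketch assumes the 1:3 grid ratio above and, as an assumption, parent_time_step_ratio = 3 on every nest. Under these assumptions each nest level divides the time step by 3, so d05 runs at the d01 step divided by 81. For comparison it includes the commonly cited guideline of about 6 × dx (dx in km) seconds for d01.

```python
def nest_steps(parent_dx_m, parent_dt_s, n_domains, ratio=3):
    """List (domain, dx in m, dt in s), assuming the same grid and time-step ratio on every nest."""
    return [
        (d + 1, parent_dx_m / ratio**d, parent_dt_s / ratio**d)
        for d in range(n_domains)
    ]


if __name__ == "__main__":
    guideline_dt = 6 * 9.0  # about 6 x dx(km) seconds for the 9 km d01
    for dt1 in (guideline_dt, 10.0, 5.0):
        print(f"d01 dt = {dt1:g} s")
        for dom, dx, dt in nest_steps(9000.0, dt1, 5):
            steps_per_hour = 3600.0 / dt
            print(f"  d0{dom}: dx = {dx:7.1f} m, dt = {dt:.3f} s, {steps_per_hour:,.0f} steps/h")
```

Each d05 step is much cheaper than a d01 step only if d05 is small, so the steps-per-hour column for the innermost nest is usually what dominates the wall-clock time.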

**I used ChatGPT to restructure my ideas into a clear list.**

Good day,

Have you tried using the default vertical level spacing, i.e. commenting out the eta_levels(1:46) entry?

Same question, bro. The simulation stops at 3 hours without any error message.

I decided to stop using a PBL parameterization and switched to the SMS-3DTKE scheme instead. However, as when I changed the PBL parameterization in the past (e.g., to YSU), I encountered the same issue: the simulation abruptly stops at the 3-hour mark without a segmentation fault or any error message.

In contrast to the earlier tests with PBL schemes like YSU, this time there are no CFL-related errors (the target CFL is still kept low, at 0.2), which makes the root cause less clear.

So far, changing neither the turbulence scheme nor the PBL parameterization seems to resolve the original issue. In fact, switching to the SMS-3DTKE scheme appears to have made the problem worse.
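These runs stop without an error message, so a first step may be to find the last model time each domain reached. WRF's rsl files normally contain progress lines of the form `Timing for main: time <date> on domain <n>: ...`. The sketch below assumes that format and returns the last such time stamp per domain. Reading only `rsl.error.0000` is an assumption; point it at whichever file your run writes these lines to.

```python
import glob
import re

# Assumed format of WRF progress lines, e.g.
# "Timing for main: time <YYYY-MM-DD_hh:mm:ss> on domain   1:   0.5 elapsed seconds"
TIMING = re.compile(r"Timing for main: time (\S+) on domain\s+(\d+)")


def last_time_per_domain(lines):
    """Return {domain number: last model time stamp seen} from 'Timing for main' lines."""
    last = {}
    for line in lines:
        match = TIMING.search(line)
        if match:
            last[int(match.group(2))] = match.group(1)
    return last


if __name__ == "__main__":
    for path in sorted(glob.glob("rsl.error.0000")):
        with open(path, errors="replace") as handle:
            for domain, stamp in sorted(last_time_per_domain(handle).items()):
                print(f"{path}: d{domain:02d} last reached {stamp}")
```

If all domains stop at the same model time, it can help to set wrfout output just before that time, as suggested earlier in the thread.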