Hello there,

This post is tangentially related to a previous post I made (found here). In that post I described how, in the ERA5 data I was using, islands such as Guadeloupe, Dominica, and Martinique show up in the SST field (as 0 Kelvin cells) but do not appear in the landmask.

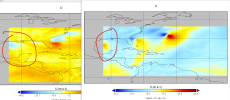

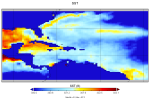

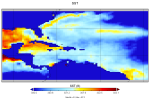

After being stumped on how to fix this, I worked around it with a Python script that alters the met_em SSTs for those few cells at each timestep. For each island cell, I took the average value of the surrounding cells and replaced the 0 Kelvin SST with that average. Below are two images showing an example (left is the original met_em SST, right is the altered one).
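A minimal sketch of this kind of neighbor-averaging fix, shown on a plain 2D grid for one timestep. The helper name `fill_zero_sst`, the 8-cell neighborhood, and the choice to leave other 0 Kelvin cells out of the average are illustrative assumptions, not the exact script used; reading and writing the SST field of the met_em files is omitted.

```python
def fill_zero_sst(sst, missing=0.0):
    """Return a copy of a 2D SST grid (list of rows, in Kelvin) in which every
    cell equal to `missing` is replaced by the mean of its valid neighbours.

    Hypothetical helper: uses the 8 surrounding cells and ignores neighbours
    that are themselves `missing`, so 0 K cells never drag the average down.
    """
    n_rows = len(sst)
    n_cols = len(sst[0]) if n_rows else 0
    filled = [row[:] for row in sst]  # copy so the input grid is untouched

    for j in range(n_rows):
        for i in range(n_cols):
            if sst[j][i] != missing:
                continue
            # Collect valid neighbours, clipping the window at the grid edges.
            neighbours = [
                sst[jj][ii]
                for jj in range(max(j - 1, 0), min(j + 2, n_rows))
                for ii in range(max(i - 1, 0), min(i + 2, n_cols))
                if (jj, ii) != (j, i) and sst[jj][ii] != missing
            ]
            if neighbours:  # leave the cell alone if nothing valid surrounds it
                filled[j][i] = sum(neighbours) / len(neighbours)
    return filled


# Toy 3x3 grid with one 0 K "island" cell in the middle.
example = [
    [301.0, 301.0, 302.0],
    [300.0,   0.0, 302.0],
    [300.0, 301.0, 301.0],
]
patched = fill_zero_sst(example)
```

Averaging from the original grid rather than the partially filled copy keeps the result independent of the order in which cells are visited, which matters when several island cells sit next to each other.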

I altered the SSTs this way because the landmask/landsea field treats those cells as water, and the 0 Kelvin spot so close to Puerto Rico (the research area) kept showing up and affecting other variables such as T2 and Skintemp.

That's all for context. I altered the met_ems, ran real, and got the simulation running with no snafus. The real trouble I'm having now is figuring out why the simulation breaks 4 years in. The full run is 2013-2018, and it breaks on 08/25/2017.

I can't find a CFL error or any other error in the rsl.* files. I do think it's tied to my manipulation of the SSTs, though, because when I redid the WPS process without altering the met_ems in any way, the simulation ran fine. When I alter the SSTs, it breaks at the same point every time.

I don't understand why it's breaking now after running the previous years just fine. I've also run plenty of other simulations with altered SSTs and had no problems, so this feels very strange to me. Are there other variables tied to the SSTs that I didn't consider? I should also say that this simulation uses WRF 3.8. I've attached my namelist and some of the rsl.out files, but I couldn't upload many because they were too large even when compressed. If more are needed, I can find a workaround. The same goes for the met_ems themselves, if anyone is interested in comparing them.

Thanks for any help or information that can be provided.
