Hi all,

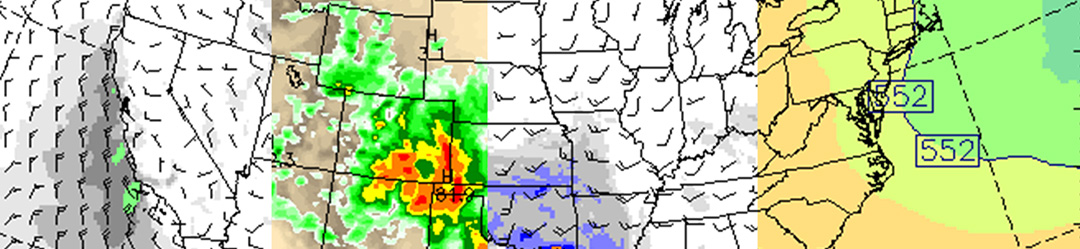

I posted here earlier about a problem getting WRF 4.5.2 to run on Derecho with a very large domain (the model crashes). My domain is nx, ny = 3551, 2441. Are there known limitations on WRF's ability to scale to a domain of this size?
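For a sense of scale, here is a minimal Python sketch of the basic arithmetic. It assumes nx and ny correspond to WRF's `e_we` and `e_sn`. It also applies a commonly quoted WRF forum rule of thumb for processor counts: roughly (e_we/100)*(e_sn/100) as a lower bound and (e_we/25)*(e_sn/25) as an upper bound. Those thresholds are an assumption here, not a hard limit enforced by WRF, and `rule_of_thumb_range` is a hypothetical helper.

```python
def horizontal_points(e_we: int, e_sn: int) -> int:
    """Number of horizontal grid points on one model level."""
    return e_we * e_sn


def rule_of_thumb_range(e_we: int, e_sn: int) -> tuple[int, int]:
    """Hypothetical helper: (min, max) MPI rank counts under an assumed
    rule of thumb of about 100 down to 25 grid points per tile in each direction."""
    min_ranks = (e_we // 100) * (e_sn // 100)
    max_ranks = (e_we // 25) * (e_sn // 25)
    return min_ranks, max_ranks


if __name__ == "__main__":
    e_we, e_sn = 3551, 2441  # assumed to correspond to nx, ny from the post
    print("points per level:", horizontal_points(e_we, e_sn))
    lo, hi = rule_of_thumb_range(e_we, e_sn)
    print(f"rule-of-thumb rank range: {lo} to {hi}")
```

With these inputs the sketch prints 8667991 points per level and a rank range of 840 to 13774.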

My original setup was:

module load intel-classic/2023.0.0

module load ncarcompilers/1.0.0

module load cray-mpich/8.1.25

module load craype/2.7.20

module load netcdf-mpi/4.9.2

I used configure option #50, as was recommended to me in this thread. I tried many values of "select" to get more CPUs (from 1024 up to 2304), and the run kept failing. I have therefore been trying other configure options, including 24 and 15.
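The CPU counts tried here can be translated into approximate per-rank patch sizes. A minimal Python sketch, assuming `e_we`/`e_sn` equal the nx, ny given above and a near-square 2D split with more ranks along the longer x direction. `near_square_decomposition` and `max_patch_size` are hypothetical helpers; WRF's own default decomposition (or explicit `nproc_x`/`nproc_y` in the `&domains` namelist) may choose a different layout.

```python
import math


def near_square_decomposition(nranks: int) -> tuple[int, int]:
    """Hypothetical helper: split nranks into (nproc_x, nproc_y) with
    nproc_x * nproc_y == nranks, factors as close as possible, and the
    larger factor along x (the longer side of this domain)."""
    best = (nranks, 1)
    for p in range(1, math.isqrt(nranks) + 1):
        if nranks % p == 0:
            best = (nranks // p, p)  # later divisors are closer to sqrt(nranks)
    return best


def max_patch_size(e_we: int, e_sn: int, nproc_x: int, nproc_y: int) -> tuple[int, int]:
    """Largest patch (x, y) in grid points when points are split as evenly as possible."""
    return (e_we + nproc_x - 1) // nproc_x, (e_sn + nproc_y - 1) // nproc_y


if __name__ == "__main__":
    e_we, e_sn = 3551, 2441  # assumed to correspond to nx, ny from the post
    for nranks in (1024, 2304):
        px, py = near_square_decomposition(nranks)
        nx_patch, ny_patch = max_patch_size(e_we, e_sn, px, py)
        print(f"{nranks} ranks -> {px} x {py} -> patch up to {nx_patch} x {ny_patch} points")
```

For 1024 and 2304 ranks this gives 32 x 32 and 48 x 48 layouts, with patches of at most 111 x 77 and 74 x 51 points respectively.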

Most recently, I've been trying configure option #78 (Intel oneAPI), since the latest WRF version is supposed to support it. I therefore ran `module load intel-oneapi/2023.0.0` before compiling, but the compile failed. I'm attaching the text output from that compile command. Any ideas what's going wrong here?

I really need to get these runs going in support of an ongoing virtual field campaign, so huge thanks in advance if anyone has some insights.

Cheers, James